Web scraping at scale in 2026 means confronting sophisticated anti-bot systems that analyze everything from your IP address to how you move your mouse. Rotating proxies have become the foundation of most successful data extraction operations because they distribute requests across hundreds or thousands of IP addresses, making it much harder for target websites to identify and block your scraper by IP address alone.

This isn't theoretical. Enterprise scrapers processing millions of requests daily rely on rotating residential proxies to maintain consistent access to pricing data, product catalogs, and market intelligence. The difference between a blocked scraper and a reliable data pipeline often comes down to how intelligently you rotate your IPs.

Before diving into technical implementation, a note on responsible use: web scraping should focus on publicly available data, respect robots.txt directives, and comply with website terms of service. The techniques discussed here support legitimate business intelligence, competitive analysis, and market research—not circumventing access controls on private or restricted content.

How Rotating Proxies Actually Work

A rotating proxy service maintains a pool of IP addresses and automatically assigns a different IP for each request—or after a specified time interval. When you send a request, it passes through a backconnect gateway server that selects an IP from the pool before forwarding your traffic to the target website.

The rotation happens through three primary mechanisms:

Per-request rotation assigns a fresh IP address for every single connection. This approach works well for high-volume scraping where you need maximum anonymity and don't require session persistence.

Time-based rotation switches IPs after a set duration (typically 1-30 minutes). This is useful when you need to maintain some consistency while still avoiding detection patterns.

Sticky sessions preserve the same IP for extended periods, which is essential for multi-step workflows like logging into accounts, navigating checkout processes, or paginating through search results.

The technical implementation involves a proxy pool manager that tracks healthy IPs, a rotation algorithm that decides which proxy handles each request, and error handling that marks blocked IPs for temporary exclusion and recovery.
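The three components described above can be sketched in a few lines. This is a minimal illustration rather than any provider's actual implementation: the class name `ProxyPool`, the 300-second cooldown, and the plain round-robin policy are all assumptions chosen for brevity.

```python
import itertools
import time


class ProxyPool:
    """Round-robin pool that skips proxies marked as blocked until a cooldown ends."""

    def __init__(self, proxies, cooldown_seconds=300.0, clock=time.monotonic):
        self._proxies = list(proxies)
        self._cooldown = cooldown_seconds      # assumed value, tune per target
        self._clock = clock                    # injectable so time can be faked
        self._blocked_until = {}               # proxy -> time it may be used again
        self._cycle = itertools.cycle(self._proxies)

    def is_healthy(self, proxy):
        return self._clock() >= self._blocked_until.get(proxy, float("-inf"))

    def next_proxy(self):
        # Inspect each proxy at most once per call so this never loops forever.
        for _ in range(len(self._proxies)):
            proxy = next(self._cycle)
            if self.is_healthy(proxy):
                return proxy
        raise RuntimeError("no healthy proxies available")

    def mark_blocked(self, proxy):
        # Temporary exclusion: the proxy rejoins rotation after the cooldown.
        self._blocked_until[proxy] = self._clock() + self._cooldown
```

Calling `next_proxy()` before every request gives per-request rotation. Calling it once and reusing the result approximates a sticky session.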

Why Residential IPs Outperform Datacenter Proxies for Protected Sites

The choice between residential and datacenter proxies significantly impacts your ability to collect data from protected websites. Based on aggregated industry performance data, residential proxies typically maintain success rates between 85% and 95% when accessing sites with bot protection. Datacenter proxies, however, often see their success rates decline to the 20-40% range against the same targets—a gap that compounds costs through failed requests and retries.

Why such a dramatic difference? Residential IPs are assigned by ISPs to actual households, so they carry the trust signals that websites associate with genuine visitors. Datacenter IPs share identifiable characteristics (common subnet ranges, hosting provider ASNs, and IP registration patterns) that sophisticated security systems recognize and filter as a category.

That said, datacenter proxies still serve a purpose. They're significantly faster (100-1000 Mbps vs. 10-100 Mbps for residential) and usually cheaper, although the pricing models differ: datacenter IPs are typically billed per address ($0.10-0.50 per IP monthly), while residential traffic is typically billed by bandwidth ($2-15 per GB). For scraping publicly available datasets, government records, or sites without aggressive bot protection, datacenter proxies offer better cost efficiency.

The practical approach for most operations involves a hybrid strategy: route low-risk requests through datacenter IPs to minimize costs, then switch to residential proxies when encountering blocks or targeting protected e-commerce platforms, social media sites, or travel aggregators.
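A hybrid strategy like this reduces to "try the cheap tier first, escalate on a block." The sketch below assumes a hypothetical `fetch(url, tier)` callable that returns an HTTP status code, and it treats 403 and 429 as block signals. Both are illustrative choices, not a fixed rule.

```python
BLOCK_STATUSES = {403, 429}  # assumed block signals; real sites vary


def fetch_with_escalation(url, fetch, tiers=("datacenter", "residential")):
    """Try cheaper tiers first and escalate when a response looks like a block.

    `fetch` is a hypothetical callable: fetch(url, tier) -> HTTP status code.
    Returns (tier_used, status_code) for the last attempt made.
    """
    status = None
    for tier in tiers:
        status = fetch(url, tier)
        if status not in BLOCK_STATUSES:
            return tier, status
    # Every tier was blocked; report the most expensive tier's result.
    return tiers[-1], status
```

In practice you would probably remember which domains needed escalation so later requests skip the datacenter attempt.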

Modern Anti-Bot Detection: What You're Up Against

Websites in 2026 deploy multi-layered defenses that go far beyond simple IP blocking. Understanding these systems helps you design rotation strategies that actually work.

IP reputation scoring tracks historical behavior associated with each address. A residential IP with years of normal browsing activity carries more trust than a freshly provisioned datacenter IP. Some security systems share blacklists across networks, meaning an IP banned on one major retailer might already be flagged elsewhere.

TLS fingerprinting analyzes the parameters exchanged during the TLS handshake: cipher suites, extensions, and protocol versions combine into a signature that identifies the client software. Standard HTTP libraries produce different fingerprints than real browsers, which anti-bot systems can detect on the very first request.

Behavioral analysis monitors mouse movements, scrolling patterns, keystrokes, and timing between actions. Bots that interact too efficiently—clicking instantly, scrolling at constant speeds, never pausing—trigger detection algorithms trained on millions of real user sessions.

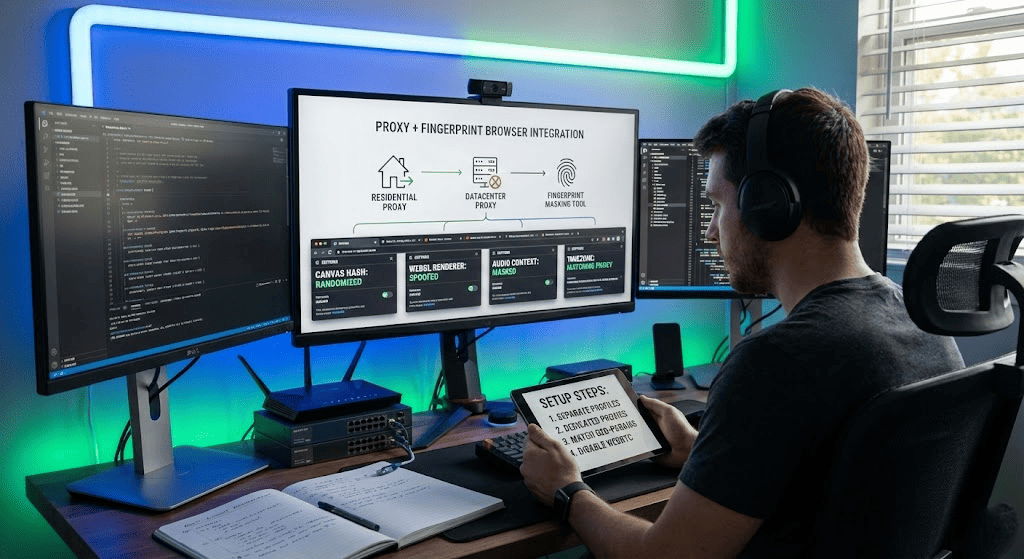

Browser fingerprinting collects dozens of client-side signals: screen resolution, installed fonts, WebGL renderer, audio context, canvas rendering. Combined, they form a near-unique identifier that persists even when you rotate IPs. If multiple sessions share identical fingerprints but come from different IP addresses, that pattern itself becomes a detection signal.

Effective proxy rotation addresses only one layer of this stack. Maintaining reliable data access on well-protected sites requires combining IP rotation with browser-grade TLS configurations, consistent fingerprint profiles, and request timing that mirrors organic user behavior.

Rotation Strategies That Actually Scale

Random proxy selection—picking any IP from the pool for each request—is the simplest approach, but it has limitations. If your rotation algorithm doesn't account for network topology, you might inadvertently send consecutive requests through IPs that share the same network block or autonomous system number. Security systems track these patterns and can flag traffic that rotates within a narrow IP range while claiming to represent different users. Better implementations verify that each selected proxy differs meaningfully from recent selections in terms of network origin and geography.
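One way to add that diversity check is to remember the network blocks of the last few selections and exclude them from the next pick. The sketch below uses a /24 prefix as a rough stand-in for "same network block", which is an assumption. Checking the autonomous system or geography would need an external lookup that is not shown here.

```python
import ipaddress
import random
from collections import deque


def subnet_of(ip, prefix=24):
    # strict=False lets us pass a host address instead of a network address.
    return ipaddress.ip_network(f"{ip}/{prefix}", strict=False)


class DiverseSelector:
    """Random selection that avoids the subnets of recently used IPs."""

    def __init__(self, ips, recent_window=3, rng=None):
        self._ips = list(ips)
        self._recent = deque(maxlen=recent_window)   # subnets of recent picks
        self._rng = rng or random.Random()

    def pick(self):
        candidates = [ip for ip in self._ips if subnet_of(ip) not in self._recent]
        if not candidates:
            candidates = self._ips   # pool too small; fall back to any IP
        choice = self._rng.choice(candidates)
        self._recent.append(subnet_of(choice))
        return choice
```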

Weighted rotation assigns scores to each proxy based on recent performance. IPs with high success rates get selected more frequently, while those returning errors or blocks are temporarily penalized. The weight calculation typically factors in:

Time since last use (recently used proxies get lower priority)

Success rate against specific targets

Response latency patterns

Proxy type (residential IPs often get bonus weight)

Subnet diversity from the previously used IP
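The factors listed above can be folded into a single score. Every coefficient in this sketch (the 60-second idle saturation, the 1.5x residential bonus, the 0.25x same-subnet penalty) is an illustrative assumption, and `ProxyStats` is a hypothetical record type rather than part of any library.

```python
import random
from dataclasses import dataclass


@dataclass
class ProxyStats:
    address: str
    subnet: str = ""
    successes: int = 0
    failures: int = 0
    avg_latency_s: float = 1.0
    last_used: float = 0.0
    residential: bool = False


def weight(p, now, previous_subnet=None):
    total = p.successes + p.failures
    success_rate = (p.successes + 1) / (total + 2)           # smoothed so new proxies are not zero
    idle = min(max(now - p.last_used, 0.0) / 60.0, 1.0)      # recently used -> lower priority
    latency_factor = 1.0 / (1.0 + p.avg_latency_s)           # slower proxies score lower
    w = success_rate * (0.2 + 0.8 * idle) * latency_factor
    if p.residential:
        w *= 1.5                                             # assumed bonus for residential IPs
    if previous_subnet is not None and p.subnet == previous_subnet:
        w *= 0.25                                            # assumed penalty for repeating a subnet
    return w


def choose(proxies, now, previous_subnet=None, rng=random):
    weights = [weight(p, now, previous_subnet) for p in proxies]
    return rng.choices(proxies, weights=weights, k=1)[0]
```

Because selection is probabilistic, penalized proxies still get occasional traffic, which lets their scores recover once a block lifts.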

Adaptive rotation monitors response signals and adjusts rotation frequency dynamically. If you start receiving CAPTCHAs or 429 rate limit errors, the system automatically rotates more aggressively. When responses flow smoothly, it can maintain sessions longer to reduce overhead.
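A simple way to model this is a controller that tracks how many requests each IP may serve before rotating. The halve-on-pressure, grow-by-one-on-success policy below is an assumption borrowed from common congestion-control designs, not something the rotation services themselves document.

```python
class AdaptiveRotation:
    """Tracks how many requests to send through one IP before rotating."""

    def __init__(self, min_requests=1, max_requests=20, start=5):
        self.min_requests = min_requests
        self.max_requests = max_requests
        self.requests_per_ip = start

    def record(self, status_code, saw_captcha=False):
        if saw_captcha or status_code == 429:
            # Detection pressure: rotate more aggressively by halving the budget.
            self.requests_per_ip = max(self.min_requests, self.requests_per_ip // 2)
        elif 200 <= status_code < 300:
            # Smooth responses: let sessions run slightly longer.
            self.requests_per_ip = min(self.max_requests, self.requests_per_ip + 1)
        return self.requests_per_ip
```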

For session-dependent workflows, the key is knowing when consistency matters. Price monitoring scripts typically need per-request rotation because each page fetch is independent. Account management or checkout testing requires sticky sessions to maintain authentication state across multiple requests.

Concurrency and Rate Limiting: The Overlooked Factor

Rotating IPs doesn't solve the problem if you're still hammering a single domain with excessive parallel requests. Even with different source IPs, hundreds of simultaneous connections to one website create abnormal traffic patterns.

Most successful scrapers implement per-domain concurrency limits. Running 10-20 parallel requests to a target while distributing traffic across your rotation pool appears more natural than 200 simultaneous connections—even if each comes from a different IP.
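A per-domain limit can be enforced with one semaphore per hostname. The sketch below uses `asyncio` and assumes a hypothetical async `fetch(url)` coroutine supplied by the caller. The default of 10 simply mirrors the lower end of the range mentioned above.

```python
import asyncio
from urllib.parse import urlsplit


class DomainLimiter:
    """Caps the number of in-flight requests per hostname."""

    def __init__(self, per_domain=10):
        self._per_domain = per_domain
        self._semaphores = {}    # hostname -> asyncio.Semaphore

    def _semaphore_for(self, url):
        host = urlsplit(url).hostname or ""
        if host not in self._semaphores:
            self._semaphores[host] = asyncio.Semaphore(self._per_domain)
        return self._semaphores[host]

    async def run(self, url, fetch):
        # `fetch` is a hypothetical coroutine function: await fetch(url).
        async with self._semaphore_for(url):
            return await fetch(url)
```

Requests to different domains do not block each other, so overall throughput stays high while each individual site sees a bounded number of parallel connections.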

Request timing matters equally. Adding randomized delays between 500-3000 milliseconds simulates real browsing behavior. Constant-interval requests (exactly every 1000ms, for example) produce detectable patterns. Variable delays with occasional longer pauses mimic how actual users read content and decide what to click next.
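As a rough sketch, a delay generator can draw from the 500-3000 ms range and occasionally return a longer "reading" pause. The 10% chance and the 5-15 second range for long pauses are assumptions for illustration only.

```python
import random


def human_delay(rng=random, low=0.5, high=3.0,
                long_pause_chance=0.1, long_pause=(5.0, 15.0)):
    """Return a delay in seconds: usually short, occasionally a longer pause."""
    if rng.random() < long_pause_chance:
        return rng.uniform(*long_pause)   # simulated reading or decision pause
    return rng.uniform(low, high)         # ordinary gap between requests
```

A scraper would call `time.sleep(human_delay())` (or `await asyncio.sleep(...)`) between requests to the same domain.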

When blocks occur, immediate retries from a new IP often compound the problem. Anti-bot systems recognize rapid-fire requests from different IPs hitting the same endpoint as clear automation signals. Implementing exponential backoff—waiting progressively longer between retry attempts—gives your rotation pool time to recover and reduces the chance of escalating blocks.
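Exponential backoff is straightforward to express as a generator of wait times. The base, factor, and cap below are assumed defaults. Passing an `rng` enables random jitter, which goes beyond what the paragraph above describes but is a common companion technique for avoiding synchronized retries.

```python
import random


def backoff_delays(base=1.0, factor=2.0, cap=60.0, retries=5, rng=None):
    """Yield wait times in seconds that grow exponentially up to `cap`.

    With `rng` set, each delay is drawn uniformly from [0, ceiling] (jitter).
    """
    for attempt in range(retries):
        ceiling = min(cap, base * factor ** attempt)
        yield rng.uniform(0, ceiling) if rng is not None else ceiling
```

For example, `list(backoff_delays())` produces waits of 1, 2, 4, 8, and 16 seconds before giving up.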

Measuring What Matters

Proxy performance benchmarks should track metrics that directly impact your data collection:

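Success rate (2xx/3xx responses vs. errors) tells you whether your rotation strategy is working. Judge it against what each pool normally achieves on that class of target: residential rates falling below the 85-95% range on protected sites, or datacenter rates below roughly 70% on sites without aggressive bot protection, suggest configuration issues or blacklisted IP ranges.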

Challenge frequency measures how often you encounter CAPTCHAs or JavaScript challenges. Rising challenge rates indicate detection, even if requests technically succeed.

Cost per successful record combines proxy expense with retry overhead. An expensive residential proxy that succeeds on the first attempt often costs less than cheap datacenter IPs requiring multiple retries.

Latency by region and pool type identifies slow IP ranges affecting your throughput. Geographic proximity between your proxy and target servers significantly impacts response times.

Logging these metrics per target domain reveals which sites require residential IPs versus where datacenter proxies perform adequately. This data drives cost optimization over time.
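A per-domain metrics record might look like the following sketch. `DomainMetrics` is a hypothetical type, and treating a challenged response as "not a usable record" even when its status is 2xx is a design assumption made here so that cost per record reflects data actually collected.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class DomainMetrics:
    requests: int = 0
    successes: int = 0      # 2xx/3xx responses
    challenges: int = 0     # CAPTCHAs or JavaScript challenges
    records: int = 0        # successful responses that were not challenged
    cost_usd: float = 0.0

    def record(self, status_code, challenged=False, cost_usd=0.0):
        self.requests += 1
        self.cost_usd += cost_usd
        ok = 200 <= status_code < 400
        self.successes += ok
        self.challenges += challenged
        self.records += ok and not challenged

    @property
    def success_rate(self):
        return self.successes / self.requests if self.requests else 0.0

    @property
    def challenge_rate(self):
        return self.challenges / self.requests if self.requests else 0.0

    @property
    def cost_per_record(self):
        return self.cost_usd / self.records if self.records else float("inf")


# One metrics object per target domain, created on first use.
metrics_by_domain = defaultdict(DomainMetrics)
```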

Implementation Considerations

The technical architecture for production-grade proxy rotation involves several components working together. Your proxy pool needs health checking—regularly testing IPs against known endpoints to identify blocked or dead proxies before they cause request failures.
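A basic health check can be written with the standard library alone. In this sketch the test endpoint `https://example.com/` and the 5-second timeout are placeholders, and `check_pool` accepts any probe function so the check can be swapped or faked.

```python
import http.client
import urllib.request


def probe(proxy_url, test_url="https://example.com/", timeout=5.0):
    """Return True if `test_url` answers with 2xx/3xx through `proxy_url`."""
    opener = urllib.request.build_opener(
        urllib.request.ProxyHandler({"http": proxy_url, "https": proxy_url})
    )
    try:
        with opener.open(test_url, timeout=timeout) as response:
            return 200 <= response.status < 400
    except (OSError, http.client.HTTPException):
        # Covers URLError/HTTPError, timeouts, and connection failures.
        return False


def check_pool(proxies, probe_fn=probe):
    """Split proxies into (healthy, dead) lists using `probe_fn`."""
    healthy, dead = [], []
    for proxy in proxies:
        (healthy if probe_fn(proxy) else dead).append(proxy)
    return healthy, dead
```

Running `check_pool` on a schedule, and probing against a page on the actual target where that is permitted, catches target-specific blocks that a generic endpoint would miss.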

Session management becomes critical when mixing rotation strategies. You need mechanisms to pin specific sessions to sticky IPs while allowing other traffic to rotate freely. Most providers expose this through session parameters in the proxy authentication string.
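The exact syntax for session parameters varies by provider, so the helper below is purely hypothetical: it assumes a username of the form `<user>-session-<id>`, and the gateway host and port shown in the usage note are placeholders. Check your provider's documentation for the real format.

```python
import secrets
from urllib.parse import quote


def new_session_id():
    # Random identifier; reuse it to keep the same sticky IP.
    return secrets.token_hex(4)


def build_proxy_url(user, password, host, port, session_id=None):
    """Build a proxy URL, optionally pinning a sticky session.

    HYPOTHETICAL format: the session is encoded as "<user>-session-<id>".
    Real providers use their own conventions.
    """
    username = f"{user}-session-{session_id}" if session_id else user
    # Percent-encode credentials so special characters cannot break the URL.
    return f"http://{quote(username, safe='')}:{quote(password, safe='')}@{host}:{port}"
```

Under this assumed scheme, `build_proxy_url("user", "pass", "gateway.example.com", 8000, session_id="abc123")` pins a session, while omitting `session_id` lets traffic rotate freely.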

Error handling should distinguish between temporary blocks (retry with new IP), permanent blocks (remove IP from pool), and infrastructure issues (network timeouts, provider outages). Each error type requires different recovery logic.
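The three-way distinction can be captured in a small classifier. The status-code groupings and the "three blocks means permanent" threshold in this sketch are assumptions. Real targets signal blocks in different ways, so treat the mapping as a starting point.

```python
from enum import Enum


class Recovery(Enum):
    NONE = "none"                    # no proxy-level action needed
    RETRY_NEW_IP = "retry_new_ip"    # temporary block
    REMOVE_IP = "remove_ip"          # permanent block
    BACK_OFF = "back_off"            # infrastructure issue


BLOCK_STATUSES = {403, 429}          # assumed block signals
INFRA_STATUSES = {407, 502, 504}     # assumed proxy or provider failures


def classify(status_code=None, error=None, blocks_on_this_ip=0, permanent_after=3):
    """Map one request outcome to a recovery action.

    `blocks_on_this_ip` counts earlier blocks seen on the same IP.
    """
    if error is not None or status_code in INFRA_STATUSES:
        # Timeouts, connection errors, gateway failures: wait, don't punish the IP.
        return Recovery.BACK_OFF
    if status_code in BLOCK_STATUSES:
        if blocks_on_this_ip + 1 >= permanent_after:
            return Recovery.REMOVE_IP
        return Recovery.RETRY_NEW_IP
    return Recovery.NONE
```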

For teams running continuous data pipelines, treating the proxy layer as infrastructure engineering—not just a tool toggle—makes the difference between reliable feeds and constant firefighting. That means version-controlled configuration, monitoring dashboards, and documented policies for which proxy types serve which targets.

When Rotating Proxies Aren't Enough

Some scenarios require capabilities beyond what proxy rotation alone provides. JavaScript-heavy sites that load content dynamically need headless browsers, not just HTTP requests through proxies. Sites using advanced bot management solutions may require additional measures like consistent browser profiles and realistic interaction patterns alongside IP rotation.

Managed scraping APIs that bundle proxy rotation with JavaScript rendering and request orchestration can simplify these challenges. The tradeoff is reduced control and higher per-request costs compared to self-managed proxy infrastructure.

For straightforward data collection needs—price monitoring, search result tracking, public dataset extraction—rotating residential proxies with proper rotation logic handle most targets effectively without additional complexity.

Get Started with Proxy001 Residential Proxies

If you're building data collection infrastructure that requires reliable access to public web data at scale, Proxy001 offers rotating residential proxies designed for consistent, high-success-rate scraping operations. Our residential IP pool spans global locations with flexible rotation options—per-request, time-based, or sticky sessions—to match your specific workflow requirements.

With transparent bandwidth pricing and no traffic expiration, you pay only for what you use, and unused bandwidth never lapses at the end of a billing cycle. Our residential IPs provide the authentic ISP footprints necessary for reliable access to e-commerce platforms, travel sites, and search engines where datacenter proxies are often blocked or rate limited.

Explore Proxy001's residential proxy plans and see how proper IP rotation infrastructure can transform your data collection reliability.