Not every residential proxy provider that advertises millions of IPs actually delivers millions of usable IPs. The gap between marketing claims and engineering reality is where most procurement mistakes happen — and where a structured evaluation framework earns its keep.

Across enterprise data teams and independent researchers who burn through proxy budgets faster than they'd like, a pattern emerges: teams that validate providers systematically before signing annual contracts tend to report fewer mid-project disruptions, lower cost overruns, and higher data quality. Teams that rely on review roundups and free-tier impressions tend to discover problems at scale, when switching costs are highest.

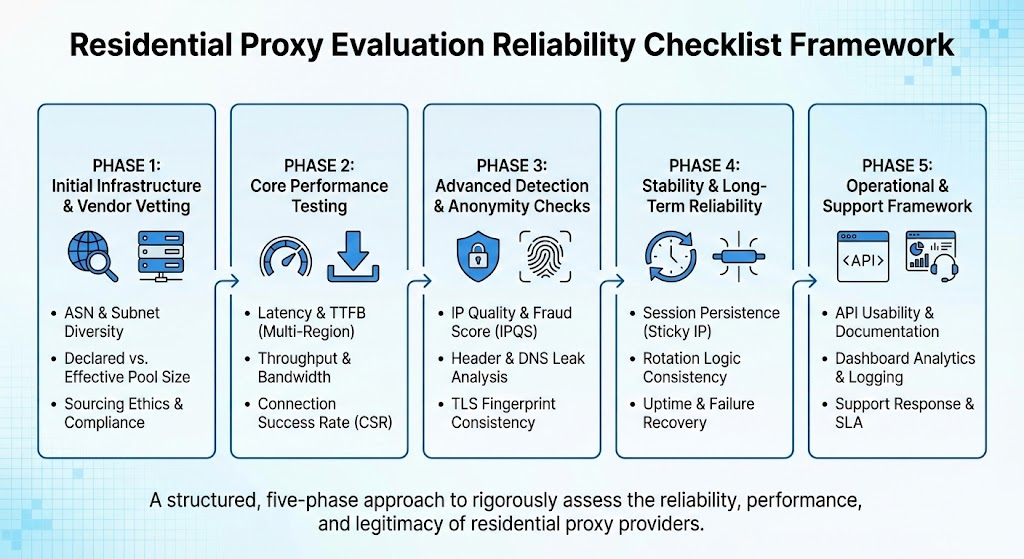

This article presents a reliability-oriented framework for evaluating residential proxy providers. It is not a ranked list of vendors. It is a structured methodology you can apply to any provider on your shortlist — including those you've never heard of.

Why Residential Proxy Evaluation Requires Its Own Framework

Evaluating residential proxies is not the same as evaluating datacenter proxies or VPN services. The distinction between residential and datacenter proxies matters here because the reliability variables are fundamentally different.

Datacenter IPs are owned by hosting companies. Their quality is relatively stable and predictable — you can inspect ASN ownership, check subnet reputation, and estimate detection risk with reasonable confidence. Residential IPs, by contrast, originate from real ISP-assigned addresses on consumer devices. Their quality fluctuates based on factors outside the provider's direct control: how IPs are sourced, how frequently the pool refreshes, whether devices are online, and whether the consent chain behind each IP is legitimate.

This creates evaluation dimensions that don't apply to other proxy types. You need to verify not just speed and uptime, but IP authenticity, geolocation accuracy, fraud-score cleanliness, ethical sourcing practices, and session behavior under real workloads. A provider can deliver excellent latency numbers while serving IPs that are already flagged across major anti-fraud databases — which is worse than no proxy at all for compliance-sensitive operations.

Dimension 1: IP Pool Quality and Authenticity

The headline number — "100 million IPs" or "72 million IPs" — tells you almost nothing about what you'll actually receive when you send requests. Pool size is a marketing metric. Pool quality is an engineering metric, and the two frequently diverge.

What to verify during a trial:

Start by requesting a sample of 50–100 IPs from your target geography. For each IP, run it through at least two independent classification services. IPQualityScore (IPQS) and Scamalytics are widely used for this purpose; IP2Location and Spur provide complementary data points. You're looking for several things simultaneously.

First, confirm the IP is actually classified as residential. Providers sometimes mix datacenter or hosting IPs into residential pools — either intentionally to pad inventory or due to poor pool hygiene. Anti-fraud systems like IPQS categorize connection types and flag datacenter IPs masquerading as residential. If more than 5–10% of your sample returns as "hosting" or "datacenter" classification, that's a significant quality concern.

Second, check fraud scores. IPQS assigns scores from 0 to 100, where scores at or above 75 suggest the IP is likely associated with proxy or VPN activity, and scores at or above 90 indicate high-risk behavior patterns. For legitimate business applications like ad verification, competitive price monitoring, or SEO research, you want the majority of your sample scoring below 75. A pool dominated by high-fraud-score IPs will trigger CAPTCHAs, blocks, and behavioral challenges on target sites — defeating the purpose of using residential proxies in the first place.

Third, check blocklist presence. Cross-reference a sample against Spamhaus and similar databases. IPs appearing on active blocklists have been associated with abuse, and their inclusion in a residential pool signals inadequate monitoring by the provider.
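The three checks above can be rolled into a single summary once you have collected results for your sample. The following is a minimal sketch, not any vendor's API: the `IpCheck` record, its field names, and the connection-type labels are assumptions, and you would populate them from whichever classification services you actually query. The thresholds are the ones discussed above (5% hosting share as the lower bound of concern, a fraud score of 75).

```python
from dataclasses import dataclass


@dataclass
class IpCheck:
    """One sampled IP with results gathered from external services (hypothetical shape)."""
    ip: str
    connection_type: str  # assumed labels, e.g. "residential", "hosting", "datacenter"
    fraud_score: int      # 0-100 scale
    blocklisted: bool     # True if found on any blocklist you checked


def summarize_pool_sample(checks, max_hosting_share=0.05, fraud_threshold=75):
    """Return the share of non-residential, high-fraud-score, and blocklisted IPs."""
    if not checks:
        raise ValueError("sample is empty")
    total = len(checks)
    hosting = sum(c.connection_type.lower() in {"hosting", "datacenter"} for c in checks)
    high_fraud = sum(c.fraud_score >= fraud_threshold for c in checks)
    listed = sum(c.blocklisted for c in checks)
    return {
        "sample_size": total,
        "hosting_share": hosting / total,
        "high_fraud_share": high_fraud / total,
        "blocklisted_share": listed / total,
        # Flag the pool if hosting/datacenter IPs exceed the tolerated share.
        "hosting_concern": hosting / total > max_hosting_share,
        # True when fewer than half of the sampled IPs reach the fraud threshold.
        "majority_below_fraud_threshold": high_fraud / total < 0.5,
    }
```

Running the same summary over samples from each shortlisted provider gives you comparable numbers rather than impressions.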

Dimension 2: Geolocation Accuracy

Geolocation mismatch is one of the most common — and most expensive — proxy quality problems. A provider claims to offer city-level targeting in Houston, but the IP actually resolves to a Dallas subnet according to MaxMind, or worse, to a different state entirely.

Research led by Carnegie Mellon University researchers and presented at the ACM Internet Measurement Conference found that roughly one-third of the proxy server locations studied could not be verified as matching their advertised countries, with servers instead concentrated in hosting-friendly regions like the Netherlands, Germany, and the Czech Republic. While that study focused on VPN-style proxies, the underlying problem applies to residential pools as well: geolocation databases disagree, IPs get reassigned across regions, and providers don't always catch the drift.

How to test this systematically:

For each target geography you plan to use, request 20–30 IPs and check their reported location against at least two independent geolocation databases. MaxMind GeoIP2 is widely treated as the industry standard, with commonly cited accuracy of approximately 99% at country level and 80–90% at city level. Cross-reference against IP2Location or ipapi.is for a second opinion.

Focus on three things: country-level agreement (both databases should return the same country), city-level consistency (within 50 kilometers of your target), and ISP classification (the ISP field should show a legitimate consumer broadband provider, not a hosting company or mobile carrier, unless you specifically requested mobile IPs).
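As a rough sketch of those three checks, the snippet below compares two database results for one IP against a target city. The `GeoResult` shape and the `isp_type` labels are assumptions for illustration; real geolocation databases expose these fields under their own names. Distance uses the standard haversine formula with a mean Earth radius of 6,371 km.

```python
import math
from dataclasses import dataclass


@dataclass
class GeoResult:
    """Lookup result for one IP from one geolocation database (hypothetical shape)."""
    country: str   # ISO country code, e.g. "US"
    lat: float
    lon: float
    isp_type: str  # assumed labels, e.g. "residential", "hosting", "mobile"


def haversine_km(lat1, lon1, lat2, lon2):
    """Great-circle distance between two points in kilometers."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlam = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlam / 2) ** 2
    return 2 * 6371.0 * math.asin(math.sqrt(a))


def check_geolocation(db_a, db_b, target_lat, target_lon,
                      max_km=50.0, allowed_isp_types=("residential",)):
    """Apply the country, city-distance, and ISP-type checks to two database results."""
    dist_a = haversine_km(db_a.lat, db_a.lon, target_lat, target_lon)
    dist_b = haversine_km(db_b.lat, db_b.lon, target_lat, target_lon)
    return {
        "country_agreement": db_a.country.upper() == db_b.country.upper(),
        "city_level_ok": dist_a <= max_km and dist_b <= max_km,
        "isp_ok": db_a.isp_type in allowed_isp_types and db_b.isp_type in allowed_isp_types,
        "distance_km": (dist_a, dist_b),
    }
```

If you requested mobile IPs, pass `allowed_isp_types=("mobile",)` so that mobile carriers are not counted as a mismatch.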

If you're running geo-sensitive operations (localized pricing audits, regional ad verification, location-specific SERP tracking), verify geolocation accuracy before committing to a paid plan. Many providers' free trials include enough bandwidth to run this validation.

Dimension 3: Performance Under Realistic Load

Benchmark numbers published on provider websites are measured under ideal conditions. Your conditions are not ideal. The only performance data that matters is data you collect yourself, against your actual target endpoints, at your expected request volume.

Key metrics to capture:

Time to first byte (TTFB) measures how quickly the proxy establishes a connection and begins returning data. For residential proxies, TTFB typically ranges from 500ms to 3 seconds depending on geography and target site complexity. Anything consistently above 4 seconds suggests pool congestion or poor routing.

Success rate measures the percentage of requests that return valid responses (HTTP 200 or expected status codes) versus errors, timeouts, or blocks. The best residential proxy providers in the market report 95–99%+ success rates, but these numbers are measured against their own test endpoints. Run your tests against your actual targets. If you're collecting public e-commerce data, test against e-commerce sites. If you're doing SERP research, test against search engines. Success rates vary dramatically by target.

Connection stability matters especially for session-dependent tasks. If you need sticky sessions — maintaining the same IP across multiple sequential requests — test how long sessions actually persist versus how long the provider claims they persist. Some providers advertise 30-minute sticky sessions but the underlying IP disconnects after 8–12 minutes because the residential device went offline. Understanding sticky vs rotating proxies and when each mode applies to your use case is essential before testing.

A minimal benchmark protocol:

Send 200 requests through rotating residential proxies to your primary target endpoint, recording TTFB, HTTP status code, and response body hash for each request. Then repeat with sticky sessions if applicable. Calculate: median TTFB, 95th-percentile TTFB, success rate, unique IP count (to estimate effective pool depth for your geography), and session duration distribution for sticky mode.
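The calculation step of this protocol can be sketched as follows. This covers only the aggregation, not the request sending, and it assumes you have already logged one record per request; the `RequestRecord` shape is hypothetical. TTFB statistics are computed over requests that returned a first byte at all, and the 95th percentile uses the nearest-rank method, which is one of several common definitions.

```python
import math
import statistics
from dataclasses import dataclass
from typing import Optional


@dataclass
class RequestRecord:
    """One logged request (hypothetical shape); fields are None on timeout."""
    ttfb_ms: Optional[float]
    status: Optional[int]
    exit_ip: Optional[str]


def percentile(values, pct):
    """Nearest-rank percentile of a non-empty list, for an integer pct in 1..100."""
    if not values:
        raise ValueError("no values")
    ordered = sorted(values)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def summarize_benchmark(records, ok_statuses=frozenset({200})):
    """Compute median/p95 TTFB, success rate, and unique exit IP count."""
    if not records:
        raise ValueError("no records")
    ttfbs = [r.ttfb_ms for r in records if r.ttfb_ms is not None]
    successes = sum(r.status in ok_statuses for r in records)
    return {
        "requests": len(records),
        "median_ttfb_ms": statistics.median(ttfbs) if ttfbs else None,
        "p95_ttfb_ms": percentile(ttfbs, 95) if ttfbs else None,
        # Timeouts and unexpected status codes both count as failures.
        "success_rate": successes / len(records),
        "unique_ips": len({r.exit_ip for r in records if r.exit_ip is not None}),
    }
```

Pass your own set of expected status codes as `ok_statuses` if your target legitimately returns something other than 200.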

Run this same protocol against two or three shortlisted providers. The comparative data will reveal differences that no review article can predict, because performance is target-dependent and geography-dependent.

Dimension 4: Ethical Sourcing and Compliance

This dimension is increasingly non-negotiable for enterprise buyers, and it should matter to everyone. Residential proxy IPs come from real people's internet connections. The question is whether those people knowingly and willingly opted in.

The industry has made meaningful progress here. The Ethical Web Data Collection Initiative (EWDCI), an international consortium led by the Internet Infrastructure Coalition (i2Coalition), has established a certification program with core principles around legality, ethics, ecosystem engagement, and social responsibility. Founding and certified members include several major providers who have committed to transparent sourcing practices. The EWDCI Certified designation signals that a provider adheres to agreed-upon standards for ethical data collection, though the certification is still voluntary and not universally adopted.

What to look for in a provider's sourcing practices:

Consent transparency is the baseline. The provider should clearly explain how IPs enter their pool. The strongest model involves a dedicated application (sometimes called a proxyware or bandwidth-sharing app) where users explicitly opt in, understand that their bandwidth will be used for proxy traffic, and receive compensation. Several major providers operate this model through companion applications where end users voluntarily participate in exchange for payment.

KYC (Know Your Customer) processes for buyers are equally important. Providers who verify the identity of customers purchasing proxy access — and restrict usage to legitimate business purposes — reduce the risk that the network gets used for abuse, which in turn protects IP pool quality for all customers. If a provider has no KYC process and no acceptable use policy, the pool is more likely to contain IPs with elevated fraud scores and blocklist presence.

Look for documented compliance with GDPR, CCPA, and other data protection regulations relevant to your operating jurisdictions. Ask whether the provider has undergone any third-party security audits or holds certifications like ISO 27001 or AppEsteem.

Dimension 5: Session Control and Rotation Flexibility

How a provider handles IP rotation and session management directly impacts whether you can complete your specific tasks reliably.

Rotating residential proxies assign a new IP for each request (or at configurable intervals). This is ideal for high-volume data collection where you want maximum IP diversity and minimal fingerprint accumulation. The key evaluation question for rotation is effective pool depth: how many unique, usable IPs can you actually access in your target geography within a given time window? A provider might advertise rotating residential proxies with unlimited bandwidth, but if the effective pool for your target city is only 2,000 IPs, you'll cycle through them quickly and start encountering repeat addresses, which increases detection risk.
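One way to put a number on effective pool depth is to log the exit IP of every rotating request in order and measure how soon addresses start repeating. The sketch below assumes you already have that ordered list; how you obtain the exit IP (for example, from an IP-echo endpoint you control) is up to your setup.

```python
def pool_depth_stats(exit_ips):
    """Summarize IP reuse across an ordered sequence of observed exit IPs."""
    seen = set()
    repeats = 0
    first_repeat_at = None  # 1-based request index of the first reused IP
    for index, ip in enumerate(exit_ips, start=1):
        if ip in seen:
            repeats += 1
            if first_repeat_at is None:
                first_repeat_at = index
        else:
            seen.add(ip)
    total = len(exit_ips)
    return {
        "requests": total,
        "unique_ips": len(seen),
        "repeat_rate": repeats / total if total else 0.0,
        "first_repeat_at": first_repeat_at,
    }
```

A high repeat rate over a few hundred requests in one city suggests the usable pool there is far smaller than the headline figure.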

Sticky sessions maintain the same IP for a defined duration, which is critical for multi-step workflows like paginated data collection, authenticated session testing, or sequential form interactions. When evaluating sticky sessions, test the actual session duration. Request a 30-minute sticky session, then send requests at 2-minute intervals and log whether the IP remains consistent. Record the point at which the session breaks and a new IP is assigned.
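The logging step can be reduced to a small helper like the one below. It is a sketch that assumes you have recorded `(elapsed_seconds, exit_ip)` pairs in chronological order for one sticky session. Because you only sample at intervals, the real break happened somewhere between the last matching observation and the first mismatch.

```python
def sticky_session_break(observations):
    """Find when a sticky session changed IP.

    observations: chronological list of (elapsed_seconds, exit_ip) tuples.
    """
    if not observations:
        raise ValueError("no observations")
    first_ip = observations[0][1]
    held_until = observations[0][0]
    for elapsed, ip in observations[1:]:
        if ip != first_ip:
            # The session broke between held_until and elapsed.
            return {"held_until_s": held_until, "break_seen_at_s": elapsed, "new_ip": ip}
        held_until = elapsed
    # The IP never changed during the observation window.
    return {"held_until_s": held_until, "break_seen_at_s": None, "new_ip": None}
```

Repeating the test across several sessions gives you a distribution of actual session durations to compare against the advertised figure.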

Also evaluate how the provider handles session failures. Does it silently assign a new IP mid-session, return an error, or offer callback mechanisms to notify your application? The answer determines how much error-handling logic your integration code needs.

Dimension 6: Support, Documentation, and Trial Quality

Technical support quality is a leading indicator of provider reliability. If documentation is thin and support response times are measured in days during evaluation, expect similar responsiveness when you encounter production issues.

Evaluate before you buy:

Review the provider's developer documentation. It should include integration examples in common languages, clear endpoint specifications, authentication methods, error code references, and rate-limit guidelines. If integrating with a given provider requires guesswork, that's a negative signal.

Test support responsiveness during the trial period. Submit a technical question — something specific about session handling, geolocation targeting, or protocol support — and measure time to first response and quality of the answer. Pre-sales support is typically a provider's best support; if it's slow or vague at this stage, post-purchase experience will be worse.

Finally, evaluate trial conditions critically. Some providers offer generous free trials that represent production-quality service. Others throttle trial traffic, limit geography access, or serve from a smaller IP pool during trials — which means your evaluation data won't reflect paid-plan performance. Ask explicitly whether trial infrastructure matches production infrastructure.

Putting the Framework into Practice

The six dimensions above are not equally weighted for every use case. A team running large-scale competitive price intelligence will weight performance and rotation depth heavily. A compliance-focused enterprise will prioritize ethical sourcing and KYC verification. An SEO team doing periodic SERP audits may weight geolocation accuracy and cost efficiency above raw throughput.

The value of a structured framework is not that it produces a single "best" answer, but that it forces you to test claims against evidence before making commitments. Run a 48–72 hour evaluation period against your actual workloads. Document results in a comparison matrix. Share findings across your team.
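A comparison matrix can be as simple as a weighted average per provider. The sketch below is illustrative only: the dimension names, the 0–10 scoring scale, and the weights are all assumptions that you would replace with your own use-case priorities and measured results.

```python
def weighted_score(scores, weights):
    """Weighted average of per-dimension scores, using the weights' dimensions."""
    missing = set(weights) - set(scores)
    if missing:
        raise ValueError(f"missing scores for: {sorted(missing)}")
    total_weight = sum(weights.values())
    if total_weight <= 0:
        raise ValueError("weights must sum to a positive number")
    return sum(scores[dim] * w for dim, w in weights.items()) / total_weight


def rank_providers(matrix, weights):
    """Return (provider, score) pairs sorted from highest to lowest score."""
    scored = [(name, weighted_score(scores, weights)) for name, scores in matrix.items()]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)
```

For example, a price-intelligence team might pass weights such as `{"performance": 3, "rotation": 3, "geolocation": 1}`, while a compliance-focused team would weight sourcing most heavily. The ranking is only as good as the trial data behind each score.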

The providers who welcome this level of scrutiny — and provide the trial access, documentation, and technical transparency to support it — are generally the ones worth working with long-term.

Get Started with Proxy001

If you're evaluating residential proxy options, Proxy001 offers 100M+ residential IPs across 200+ countries with city and state-level geo-targeting, flexible rotation and sticky session controls up to 180 minutes, and a 500MB free trial for new users to run your own validation tests. Whether you need rotating proxies for high-volume data collection or static residential IPs for session-dependent workflows, Proxy001's infrastructure is built for the kind of systematic evaluation described in this guide. Start your trial at proxy001.com and benchmark against your actual endpoints before committing.